Building an AI agent application to migrate a tech stack

This article is part of “Exploring Gen AI”, a series capturing Thoughtworks technologists' explorations of using GenAI technology for software development.

20 August 2024

Tech stack migrations are a promising use case for AI support. To understand the potential better, and to learn more about agent implementations, I used Microsoft’s open source tool Autogen to try to migrate an Enzyme test to the React Testing Library.

What does “agent” mean?

Agents in this context are applications that use a Large Language Model, but that do not just display the model’s responses to the user: they also take actions autonomously, based on what the LLM tells them. There is also the hype term “multi agent”, which I think can mean anything from “I have multiple actions available in my application, and I call each of those actions an agent” to “I have multiple applications with access to LLMs, and they all interact with each other”. In this example, my application acts autonomously on behalf of an LLM, and I do have multiple actions. I guess that means I can call this “multi agent”?!

The goal

It’s quite common at the moment for teams to migrate their React component tests from Enzyme to the React Testing Library (RTL), as Enzyme is not actively maintained anymore, and RTL has been deemed the superior testing framework. I know of at least one team in Thoughtworks who has tried to use AI to help with that migration (unsuccessfully), and the Slack team have also published an interesting article about this problem. So I thought this would be a nice, relevant use case for my experiment. I looked up some documentation about how to migrate, and picked a repository with Enzyme tests.

I first migrated a small and simple test for the EncounterHistory component myself, to understand what success looks like. The following were my manual steps; they are roughly what I want the AI to do. You don’t have to understand exactly what each step means, but the list should give you an idea of the types of changes needed, and you’ll recognise these steps again later when I describe what the AI did:

- I added RTL imports to the test file
- I replaced Enzyme’s `mount()` with RTL’s `render()`, and used RTL’s global `screen` object in the assertion, instead of Enzyme’s `mount()` return value
- The test failed -> I realized I needed to change the selector function, because selection by a “div” selector doesn’t exist in RTL
- I added a `data-testid` to the component code, and changed the test code to use that with `screen.getByTestId()`
- Tests passed!

How does Autogen work?

In Autogen, you can define a bunch of agents and then put them together in a `GroupChat`. Autogen’s `GroupChatManager` manages the conversation between the agents, e.g. it decides who gets to “speak” next. One of the members of the group chat is usually the `UserProxyAgent`, which basically represents me, the developer who wants assistance. I can implement functions in my code and register them with the agents as available tools: I can say that I want to allow my user proxy to execute a function, and that I want to make the `AssistantAgent`s aware of that function, so they can tell the `UserProxyAgent` to execute it as needed. Each function needs annotations that describe what it does in natural language, so that the LLM can decide whether it is relevant.
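Roughly speaking, the framework turns the description passed at registration and the `Annotated` parameter texts into a tool description that is sent to the LLM with each request. The sketch below is not Autogen’s code. It is a minimal, standard-library-only illustration, assuming an OpenAI-style JSON Schema format for function parameters. `describe_tool` and `JSON_TYPES` are hypothetical names, and `run_tests` here is a dummy with the same signature as the function shown next.

```python
import inspect
import json
from typing import Annotated, Callable, Tuple, get_args, get_origin, get_type_hints

# Hypothetical mapping of a few Python types to JSON Schema type names
JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def describe_tool(func: Callable, description: str) -> dict:
    """Build a JSON-Schema-style tool description from a function's Annotated hints.

    A simplified illustration of what a framework can derive when a function is
    registered for the LLM; not Autogen's actual implementation.
    """
    hints = get_type_hints(func, include_extras=True)  # keep the Annotated metadata
    properties, required = {}, []
    for name, param in inspect.signature(func).parameters.items():
        hint = hints.get(name, str)
        if get_origin(hint) is Annotated:
            # Annotated[str, "..."]: the first argument is the type, the rest is metadata
            base, *metadata = get_args(hint)
            text = str(metadata[0]) if metadata else ""
        else:
            base, text = hint, ""
        properties[name] = {"type": JSON_TYPES.get(base, "string"), "description": text}
        if param.default is inspect.Parameter.empty:
            required.append(name)
    return {
        "name": func.__name__,
        "description": description,
        "parameters": {"type": "object", "properties": properties, "required": required},
    }


def run_tests(test_file_path: Annotated[str, "Relative path to the test file to run"]) -> Tuple[int, str]:
    return 0, "{}"  # dummy body: only the signature matters for the description


print(json.dumps(describe_tool(run_tests, "Run the tests"), indent=2))
```

The natural-language strings are all the model has to go on when choosing a tool, which is why they matter as much as the code.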

For example, here is the function I wrote to run the tests (where engineer is the name of my AssistantAgent):

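```python
import subprocess
from typing import Annotated, Tuple

# user_proxy, engineer and work_dir are defined elsewhere in the script


@user_proxy.register_for_execution()
@engineer.register_for_llm(description="Run the tests")
def run_tests(test_file_path: Annotated[str, "Relative path to the test file to run"]) -> Tuple[int, str]:
    output_file = "jest-output-for-agent.json"
    # Run Jest on the given test file and write its results as JSON to output_file
    subprocess.run(
        ["./node_modules/.bin/jest", "--outputFile=" + output_file, "--json", test_file_path],
        cwd=work_dir
    )
    # Return the raw JSON results so the LLM can read them
    with open(work_dir + "/" + output_file, "r") as file:
        test_results = file.read()
    return 0, test_results
```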
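This function hands the complete Jest JSON report back to the model. As a sketch of what the relevant parts of that report look like, and of one possible way to shrink it, here is a hypothetical `summarize_jest_results` helper that keeps only the failure counts and the failure messages. The field names (`numFailedTests`, `numPassedTests`, `testResults`, `status`, `message`) are assumed from Jest’s `--json` output format, and the sample data is made up.

```python
import json


def summarize_jest_results(raw_json: str, max_message_chars: int = 500) -> str:
    """Condense Jest's --json report to the fields an agent most likely needs.

    Hypothetical helper; run_tests in this article returns the raw JSON instead.
    """
    results = json.loads(raw_json)
    lines = [
        f"numFailedTests={results['numFailedTests']}, "
        f"numPassedTests={results['numPassedTests']}"
    ]
    for suite in results.get("testResults", []):
        if suite.get("status") == "failed":
            # The failure output per test file can be long, so it is truncated
            message = suite.get("message", "")[:max_message_chars]
            lines.append(f"{suite.get('name')}: {message}")
    return "\n".join(lines)


# Made-up example in the shape of a Jest JSON report (not output from a real run)
sample = json.dumps({
    "numFailedTests": 1,
    "numPassedTests": 0,
    "testResults": [
        {"name": "example.test.js", "status": "failed",
         "message": "expect(...).toBeInTheDocument is not a function"},
    ],
})
print(summarize_jest_results(sample))
```

Whether a summary like this helps or hurts is an open question: it saves tokens, but the model might need details that get cut off.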

Implementation

Using this Autogen documentation example as a starting point, I created a `GroupChat` with two agents: an `AssistantAgent` called `engineer`, and a `UserProxyAgent`. I implemented and registered three tool functions: `see_file`, `modify_code` and `run_tests`. Then I started the group chat with a prompt that describes some of the basics of how to migrate an Enzyme test, based on my experience doing it manually. (You can find a link to the full code example at the end of the article.)
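The article doesn’t show `see_file` itself, so the following is only a hypothetical sketch of what such a tool could look like, not the code used in the experiment. It returns a file’s content with 1-based line numbers, which gives the model a stable way to point at code in later edits, and it refuses paths outside the working directory. `work_dir` is passed as a parameter here so the sketch runs on its own; in a registered tool it would more likely be the same module-level `work_dir` that `run_tests` uses, and not be visible to the model.

```python
import os
import tempfile


def see_file(work_dir: str, filename: str) -> str:
    """Hypothetical see_file tool: return a file's content with 1-based line numbers."""
    root = os.path.realpath(work_dir)
    path = os.path.realpath(os.path.join(root, filename))
    # Only allow reading files inside the working directory
    if os.path.commonpath([root, path]) != root:
        return f"Error: {filename} is outside the working directory"
    if not os.path.isfile(path):
        return f"Error: {filename} does not exist"
    with open(path, "r", encoding="utf-8") as file:
        lines = file.read().splitlines()
    return "\n".join(f"{number}: {line}" for number, line in enumerate(lines, start=1))


# Usage: create a throwaway file and look at it the way the model would
with tempfile.TemporaryDirectory() as demo_dir:
    with open(os.path.join(demo_dir, "Example.test.js"), "w", encoding="utf-8") as f:
        f.write("import React from 'react';\nit('renders', () => {});\n")
    print(see_file(demo_dir, "Example.test.js"))
```

Returning errors as strings instead of raising exceptions means the model sees what went wrong and can try again with a different path.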

Did it work?!

It worked - at least once… But it also failed a bunch of times, more often than it worked. In one of the first successful runs, the model basically went through the same steps that I had gone through manually - maybe not surprisingly, because those steps were the basis of my prompt instructions.

How does it work?

This experiment helped me understand a lot better how “function calling” works, which is a key LLM capability to make agents work. It’s basically an LLM’s ability to accept function (aka tool) descriptions in a request, pick relevant functions based on the user’s prompt, and ask the application to give it the output of those function calls. For this to work, function calling needs to be implemented in the model’s API, but the model itself also needs to be good at “reasoning” about picking the relevant tools - apparently some models are better at doing this than others.
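The loop an application runs around function calling can be sketched with a scripted stand-in for the model, so it runs without any API. This is a minimal illustration under stated assumptions: `scripted_model` and `agent_loop` are hypothetical names, the message format only loosely resembles real chat APIs, and the model never executes anything itself; it only names a function and its arguments, and the application calls the function and appends the result to the history.

```python
import json
from typing import Callable, Dict, List


def scripted_model(messages: List[dict], tools: List[dict]) -> dict:
    """Hypothetical stand-in for an LLM API with function calling.

    A real model would pick a tool based on the tool descriptions and the
    conversation so far; this stub replays a fixed plan so the loop runs offline.
    """
    if not any(m["role"] == "tool" for m in messages):
        # First turn: ask the application to run a tool
        return {"tool_call": {"name": "run_tests",
                              "arguments": json.dumps({"test_file_path": "example.test.js"})}}
    return {"content": "The tests ran successfully. TERMINATE"}


def agent_loop(model: Callable[[List[dict], List[dict]], dict],
               tools: Dict[str, Callable],
               tool_specs: List[dict],
               prompt: str,
               max_turns: int = 10) -> List[dict]:
    messages = [{"role": "user", "content": prompt}]
    for _ in range(max_turns):
        # Each request sends the full history plus the tool descriptions
        reply = model(messages, tool_specs)
        if "tool_call" in reply:
            call = reply["tool_call"]
            messages.append({"role": "assistant", "content": "", "tool_call": call})
            # The application, not the model, executes the chosen function
            result = tools[call["name"]](**json.loads(call["arguments"]))
            messages.append({"role": "tool", "content": str(result)})
        else:
            messages.append({"role": "assistant", "content": reply["content"]})
            if "TERMINATE" in reply["content"]:
                break
    return messages


tools = {"run_tests": lambda test_file_path: (0, '{"numFailedTests": 0}')}
specs = [{"name": "run_tests", "description": "Run the tests"}]
history = agent_loop(scripted_model, tools, specs, "Migrate this Enzyme test to RTL")
for message in history:
    print(message["role"], len(json.dumps(message)))
```

Because every iteration passes the whole `messages` list back to the model, each request is larger than the one before it.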

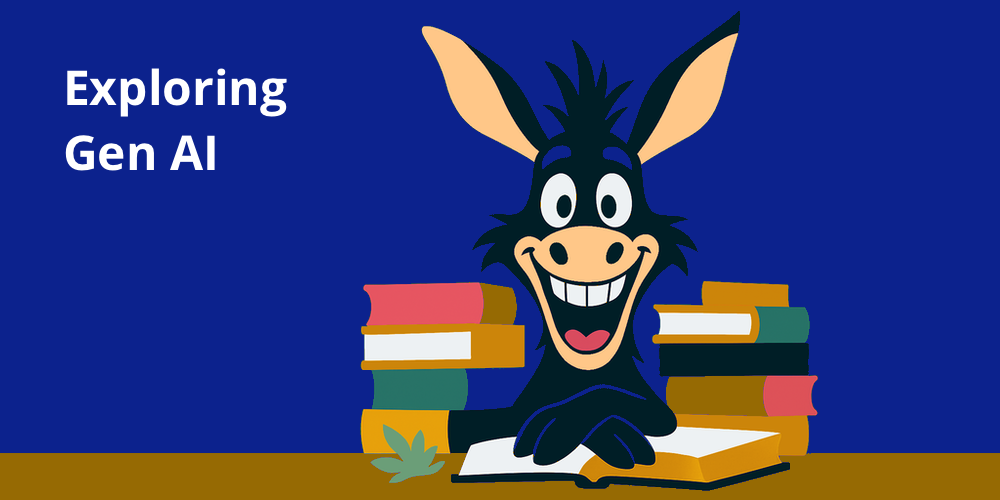

I traced the requests and responses to get a better idea of what’s happening. Here is a visualisation of that:

A few observations:

- Note how the request gets bigger and bigger with each iteration. This is how LLMs work: with every new request, you need to give them the full context of what happened so far. The application that sends the requests to the LLM can of course truncate the history once it gets larger, but finding a good way to summarise it without dropping important context is not trivial. ChatGPT is an example: the longer a conversation gets, the larger the requests to the model become, but the ChatGPT application starts truncating the context once it gets too large, before sending it to the GPT model itself. My application doesn’t have an optimisation like that, so the request just stacks up more information with each iteration. In fact, I ran into token limits a few times with this.

- There is a LOT of back and forth. The overview above shows the first 10 requests of a failed run; the test was still failing at the end of that 10th request. Note how every code modification is followed by another request from the application that confirms the change was made, just to then get the next instruction from the LLM. I could probably have saved some back and forth by including the code contents directly in the initial prompt, but it was interesting to see the `see_file` action play out. The successful run that I had took 10 requests in total:

| Iteration | Request (user_proxy:UserProxyAgent) | Response (engineer:AssistantAgent) |
|---|---|---|
| 1 | Start with prompt (“You will be getting paths to a JavaScript Enzyme test file, […], here are instructions how to migrate: […]”) | `see_file` for component code |
| 2 | Add content of component file | `see_file` for test code |
| 3 | Add content of test file | `modify_code`: add imports, use RTL’s `render` function and `screen` object |
| 4 | Confirm modification | `run_tests` |
| 5 | Add test results (“Unable to find an accessible element with the role “div””) | `modify_code`: “We must add a test id to the component” |
| 6 | Confirm modification | `modify_code`: use `getByTestId` in test |
| 7 | Confirm modification | `run_tests` |
| 8 | Add test results (“expect(…).toBeInTheDocument is not a function”) | `modify_code`: “Let’s try to solve this by importing @testing-library/jest-dom” |
| 9 | Confirm modification | `run_tests` |
| 10 | Add test results (numFailedTests = 0) | TERMINATE (“The tests ran successfully […]. The change is now completed.”) |

- In a lot of the failed runs, the code changes introduced basic syntax problems with brackets and the like, e.g. because they deleted too much code. Understandably, this usually stumped the AI completely. It could be the AI’s fault, for giving unsuitable change diff instructions, or the fault of the `modify_code` function I used, which may be too simple. I wonder about the potential of tools that don’t just take textual code diffs from the model, but instead expose the actual refactoring functionality of the IDE.
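Regarding the ever-growing requests: a simple way to stay under a token limit is to keep the task instructions plus only the most recent messages. The following is a deliberately naive sketch of that idea, not what ChatGPT or Autogen actually do. `truncate_history` and `estimate_tokens` are hypothetical names, and the four-characters-per-token estimate is a rough heuristic standing in for the model’s real tokenizer.

```python
from typing import List


def estimate_tokens(text: str) -> int:
    # Crude heuristic of roughly 4 characters per token; a real implementation
    # would count tokens with the model's own tokenizer
    return max(1, len(text) // 4)


def truncate_history(messages: List[dict], budget: int) -> List[dict]:
    """Keep the first message (the task instructions) plus as many of the most
    recent messages as fit into the token budget, dropping the oldest in between.

    Naive on purpose: it can drop context the model still needs, and it can
    separate a tool call from its result, which some chat APIs reject.
    """
    first, rest = messages[0], messages[1:]
    used = estimate_tokens(first["content"])
    kept: List[dict] = []
    for message in reversed(rest):
        cost = estimate_tokens(message["content"])
        if used + cost > budget:
            break
        kept.append(message)
        used += cost
    return [first] + kept[::-1]


history = [{"role": "user", "content": "x" * 400}] + [
    {"role": "assistant" if i % 2 else "user", "content": f"step {i} " + "y" * 200}
    for i in range(6)
]
print(len(history), "->", len(truncate_history(history, budget=250)))
```

The hard part the sketch leaves out is deciding what is safe to drop or how to summarise it, which is exactly where this gets non-trivial.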
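The syntax problems suggest one cheap safeguard: check an edit before applying it, and send an error back to the model instead of a broken file. This is a hypothetical `modify_code` variant, not the function used in the experiment. It assumes a line-range edit format and works on a string rather than a file. The bracket check is a crude stand-in for a real JavaScript parser: it ignores strings, comments and JSX, so a bracket inside a string literal can fool it.

```python
from typing import List, Tuple

CLOSING = {")": "(", "]": "[", "}": "{"}


def brackets_balanced(code: str) -> bool:
    """Rough sanity check: do (), [] and {} pair up? Not a real parser."""
    stack: List[str] = []
    for char in code:
        if char in "([{":
            stack.append(char)
        elif char in CLOSING:
            if not stack or stack.pop() != CLOSING[char]:
                return False
    return not stack


def modify_code(source: str, start_line: int, end_line: int, new_code: str) -> Tuple[bool, str]:
    """Hypothetical tool: replace lines start_line..end_line (1-based, inclusive),
    but refuse edits that turn balanced brackets into unbalanced ones."""
    lines = source.splitlines()
    if not 1 <= start_line <= end_line <= len(lines):
        return False, "Error: line range out of bounds"
    result = "\n".join(lines[: start_line - 1] + new_code.splitlines() + lines[end_line:])
    if brackets_balanced(source) and not brackets_balanced(result):
        return False, "Error: the change leaves unbalanced brackets; nothing was changed"
    return True, result


test_code = "describe('Example', () => {\n  it('renders', () => {\n    expect(1).toBe(1);\n  });\n});"
# Deleting one line too many (line 4 closes the it() block) gets rejected
print(modify_code(test_code, 3, 4, "    expect(2).toBe(2);"))
```

A refactoring-aware tool in the IDE would go further, but even a check like this turns a silent breakage into feedback the model can act on.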

Conclusion

There is a lot of hype around developer agents right now, the most prominent examples being GitHub Copilot Workspace and Amazon Q’s developer agent, and you can find a bunch more on the SWE Bench website. But even hand-picked product demos often show examples where the AI’s solution doesn’t hold up to scrutiny. These agents still have quite a way to go until they can fulfill the promise of solving any kind of coding problem we throw at them. However, I do think it’s worth considering what the specific problem spaces are where agents can help us, instead of dismissing them altogether for not being the generic problem solvers they are misleadingly advertised to be. Tech stack migrations like the one described above seem like a great use case: Upgrades that are not quite straightforward enough to be solved by a mass refactoring tool, but that are also not a complete redesign that definitely requires a human. With the right optimisations and tool integrations, I can imagine useful agents in this space a lot sooner than the “solve any coding problem” moonshot.

Thanks to Vignesh Radhakrishnan, whose autogen experiments I used as a starting point.
