How is GenAI different from other code generators?

This article is part of “Exploring Gen AI”, a series capturing Thoughtworks technologists' explorations of using GenAI technology for software development.

19 September 2023

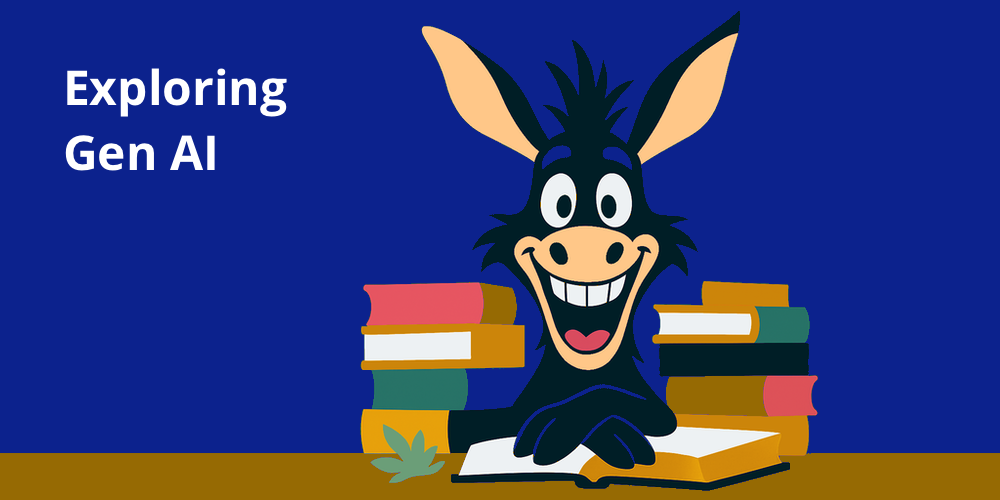

At the beginning of my career, I worked a lot in the space of Model-Driven Development (MDD). We would come up with a modeling language to represent our domain or application, and then describe our requirements with that language, either graphically or textually (customized UML, or DSLs). Then we would build code generators to translate those models into code, and leave designated areas in the code that would be implemented and customized by developers.
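The model-to-code workflow described above can be sketched in a few lines. This is a minimal Python illustration, not the workings of any particular MDD tool. It assumes a hypothetical `Entity` type standing in for a model element (roughly one class in a UML diagram), a hypothetical `generate_class` function playing the code generator, and a hypothetical `# BEGIN CUSTOM CODE` / `# END CUSTOM CODE` marker convention for the designated area that developers fill in by hand.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical marker convention delimiting the hand-written region.
BEGIN = "    # BEGIN CUSTOM CODE"
END = "    # END CUSTOM CODE"


@dataclass
class Entity:
    """A hypothetical model element, roughly one class in a UML diagram."""
    name: str
    fields: List[str] = field(default_factory=list)


def generate_class(entity: Entity) -> str:
    """Translate one model element into Python source with an empty custom region."""
    params = ", ".join(["self"] + entity.fields)
    lines = [f"class {entity.name}:", f"    def __init__({params}):"]
    lines += [f"        self.{name} = {name}" for name in entity.fields]
    if not entity.fields:
        lines.append("        pass")  # keep the generated method syntactically valid
    lines += ["", BEGIN, END]
    return "\n".join(lines) + "\n"


def regenerate(entity: Entity, existing_source: Optional[str] = None) -> str:
    """Regenerate code from the model, carrying over whatever developers
    wrote between the markers in the previously generated source."""
    fresh = generate_class(entity)
    if not existing_source or BEGIN not in existing_source:
        return fresh
    # Locate the hand-written region in the old source.
    old_start = existing_source.index(BEGIN) + len(BEGIN)
    old_end = existing_source.index(END, old_start)
    # Locate the (empty) region in the freshly generated source.
    new_start = fresh.index(BEGIN) + len(BEGIN)
    new_end = fresh.index(END, new_start)
    # Splice the old custom code into the new generated code.
    return fresh[:new_start] + existing_source[old_start:old_end] + fresh[new_end:]
```

For example, generating a hypothetical `TravelRequest` class, adding a method between the markers, and then regenerating after the model gains an `approved` field rewrites everything outside the markers while keeping the hand-written method intact. The model drives the structure; anything that needs more control has to live in the designated area.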

That style of code generation never quite took off though, except for some areas of embedded development. I think that’s because it sits at an awkward level of abstraction that in most cases doesn’t deliver a better cost-benefit ratio than other levels of abstraction, like frameworks or platforms.

What’s different about code generation with GenAI?

One of the key decisions we continuously make in our software engineering work is choosing the right abstraction level, to strike a good balance between implementation effort and the level of customizability and control we need for our use case. As an industry, we keep trying to raise the abstraction level to reduce implementation effort and become more efficient. But there is a kind of invisible force field that limits how far we can go, set by the level of control we need. Take the example of Low Code platforms: they raise the abstraction level and reduce development effort, but as a result they are best suited to certain types of simple and straightforward applications. As soon as we need to do something more custom and complex, we hit the force field and have to take the abstraction level down again.

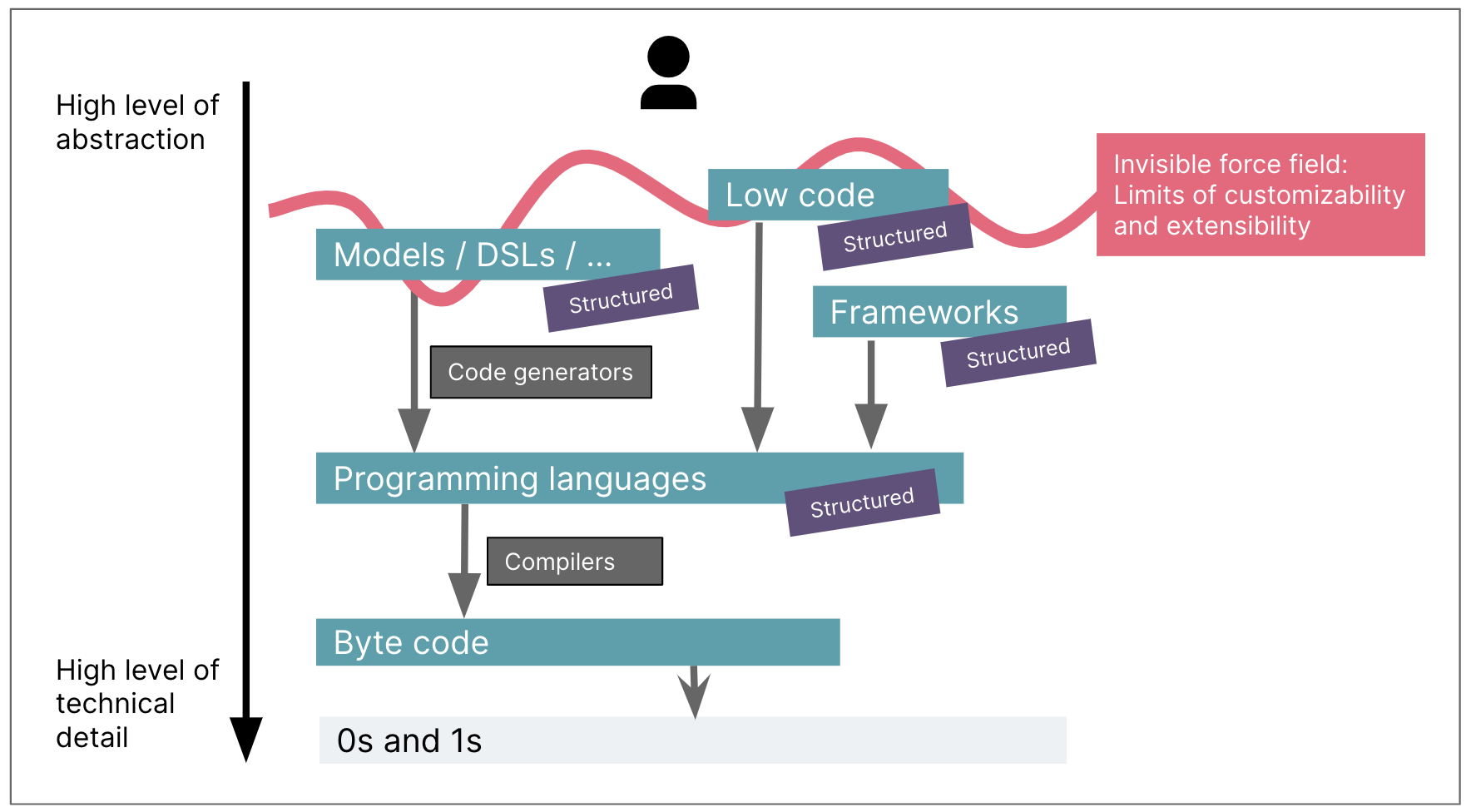

GenAI unlocks a whole new area of potential because it is not another attempt at smashing that force field. Instead, it can make us humans more effective on all the abstraction levels, without having to formally define structured languages and translators like compilers or code generators.

The higher up the abstraction level we go to apply GenAI, the lower the overall effort becomes to build a piece of software. To go back to the Low Code example, there are some impressive examples in that space which show how you can build full applications with just a few prompts. This comes with the same limitations of the Low Code abstraction level though, in terms of the use cases you can cover. If your use case hits that force field and you need more control, you’ll have to go back to a lower abstraction level, and also back to smaller promptable units.

Do we need to rethink our abstraction levels?

One approach I take when I speculate about the potential of GenAI for software engineering is to think about the distance in abstraction between our natural language prompts and our target abstraction levels. Google’s AppSheet demo, one of the Low Code examples mentioned above, uses a very high-level prompt (“I need to create an app that will help my team track travel requests […] fill a form […] requests should be sent to managers […]”) to create a functioning Low Code application. How many target levels down could we push with a prompt like that to get the same results, e.g. with Spring and React framework code? Or, how much more detailed (and less abstract) would the prompt have to be to achieve the same result in Spring and React?

If we want to better leverage GenAI’s potential for software engineering, maybe we need to rethink our conventional abstraction levels altogether, to build more “promptable” distances for GenAI to bridge.

Thanks to John Hearn, John King, Kevin Bralten, Mike Mason and Paul Sobocinski for their insightful review comments on this memo.
